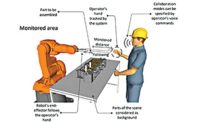

COLLEGE STATION, TX—Humans and robots are working more closely than ever on assembly lines. To study how these interactions affect human trust in robots during production tasks, a team of researchers at Texas A&M University is using brain imaging.

Capturing human trust levels has been difficult in the past because trust is subjective. The current study focuses on the brain-behavior relationships that explain why and how an operator’s trusting behaviors are influenced by both human and robot factors.

“While our focus so far was to understand how operator states of fatigue and stress impact how humans interact with robots, trust became an important construct to study,” says Ranjana Mehta, Ph.D., an associate professor of industrial and systems engineering and director of the NeuroErgonomics Lab at Texas A&M. “We found that as humans get tired, they let their guards down and become more trusting of automation than they should. However, why that is the case becomes an important question to address.”

Using functional near-infrared spectroscopy (fNIRS), Mehta and her colleagues captured functional brain activity as people collaborated with robots. They found that faulty robot actions decreased the operator’s trust in the robots.

That distrust was associated with increased activation of regions in the frontal, motor and visual cortices, indicating increased workload and heightened situational awareness. The same distrusting behavior was also associated with a decoupling of these brain regions, which were well connected when the robot behaved reliably.

According to Mehta, this decoupling was greater at higher robot autonomy levels, indicating that neural signatures of trust are influenced by the dynamics of human-autonomy teaming.

“What we found most interesting was that the neural signatures differed when we compared brain activation data across reliability conditions (manipulated using normal and faulty robot behavior) vs. operator’s trust levels (collected via surveys) in the robot,” explains Mehta. “This emphasized the importance of understanding and measuring brain-behavior relationships of trust in human-robot collaborations, since perceptions of trust alone is not indicative of how operators’ trusting behaviors shape up.”

The next step is to expand the research into a different work context, such as emergency response, and understand how trust in multi-human robot teams impacts teamwork and task work in safety-critical environments. According to Mehta, the long-term goal is not to replace humans with autonomous robots, but to support them by developing trust-aware autonomy agents.

“This work is critical, and we are motivated to ensure that humans-in-the-loop robotics design, evaluation and integration into the workplace are supportive and empowering of human capabilities,” says Mehta.